In my previous post, I discussed how my approach to keeping book notes in Obsidian tries to leverage the “mo-connections-mo-retention” aspect of our brain. That idea, however, depends heavily on how we make connections while taking or making notes. Connecting the dots between notes is a “manual” process. One could argue that “connecting” is “synthesis” and needs to be manual and deliberate, but surely we can find a better way 😉

Connecting notes

Here are three methods you can use to find connections between notes. I use a combination of all three, but I feel there’s a smarter approach lurking around somewhere.

- Memory – recall something that connects to what you’re working on right now (this can be highly erratic, depending on how well you remember your previous notes)

- Unlinked mentions – open the unlinked mentions of a note to see all the other notes where it is mentioned but not linked. This relies on the keywords in your title (Obsidian literally looks for the title in other notes) and is non-fuzzy (for good reason). I add aliases to each note’s frontmatter so that the search for unlinked mentions also covers them (a note titled “How to Argue” with the aliases “Forming an argument” or “Argumentative Indians” has a better chance of being linked elsewhere), but even that is limited by how creative you can get when assigning aliases to your notes.

- Local graph – visually look for nearby notes, or notes connected to the current one at the nth degree
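To make the limits of unlinked mentions concrete, here is a minimal Python sketch of an exact-phrase mention search that also checks aliases. It only approximates the behaviour described above and is not Obsidian’s actual implementation: the function name `unlinked_mentions`, the whole-word case-insensitive matching, and the sample notes are all assumptions for illustration.

```python
import re
from typing import Dict, List


def unlinked_mentions(title: str, aliases: List[str], notes: Dict[str, str]) -> List[str]:
    """Return the names of notes that mention `title` or one of its `aliases`
    as plain text, i.e. without an existing [[wikilink]].

    Matching is exact-phrase, whole-word and case-insensitive: an assumption
    made for illustration, not Obsidian's actual rules.
    """
    phrases = [title, *aliases]
    pattern = re.compile(
        r"\b(?:" + "|".join(re.escape(p) for p in phrases) + r")\b", re.IGNORECASE
    )
    hits = []
    for name, text in notes.items():
        if name == title:
            continue  # a note doesn't count as mentioning itself
        # Strip existing [[wikilinks]] so already-linked mentions are ignored.
        plain = re.sub(r"\[\[[^\]]*\]\]", "", text)
        if pattern.search(plain):
            hits.append(name)
    return hits


# Hypothetical vault: note name -> note body.
sample_notes = {
    "How to Argue": "Notes on building a case.",
    "Debate club": "Forming an argument starts with listening.",
    "Rhetoric": "See [[How to Argue]] for details.",
    "Persuasion": "Arguing well needs evidence.",
}

print(unlinked_mentions("How to Argue", ["Forming an argument", "Argumentative Indians"], sample_notes))
# ['Debate club']
```

The “Persuasion” note is clearly about arguing, yet it is missed because it never uses the exact title or an alias. That blind spot is where semantic search can help.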

A better approach would be to leverage fast-growing artificial intelligence and semantic search techniques to help us connect the dots that aren’t easily visible.

Enter OpenAI embeddings

OpenAI is an AI research lab that releases a lot of ready-to-use AI models you can use in your own applications. Think classification, semantic search, completions, etc.

An “embedding” is a vector representation (in simple terms, a list of numbers) that captures the meaning of a piece of text across hundreds or thousands of dimensions. Loosely, you can think of those dimensions as encoding things like category, sub-category, genre, gender or voice, although in practice individual dimensions rarely map to such neat labels. The closer two vectors are to each other, the more semantically similar the texts they represent.
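As a toy illustration of “closer vectors mean more similar text”, the sketch below uses three made-up 3-dimensional vectors and Euclidean distance (via `math.dist`, Python 3.8+). The numbers and titles are invented for illustration; real embeddings come from the model and have far more dimensions. The later step in this post uses cosine similarity instead, which compares the direction of two vectors rather than the raw distance between them.

```python
import math

# Made-up 3-dimensional "embeddings" for illustration only. Real embeddings
# come from the model, have many more dimensions, and individual dimensions
# don't carry neat human-readable labels.
toy_embeddings = {
    "In group bias": [0.9, 0.8, 0.1],
    "Echo chambers": [0.8, 0.9, 0.2],
    "Compound interest": [0.1, 0.2, 0.9],
}


def distance(a, b):
    """Euclidean distance between two vectors: smaller means closer in meaning."""
    return math.dist(a, b)


def closest(title, embeddings):
    """Return the other title whose vector is nearest to `title`'s vector."""
    others = [other for other in embeddings if other != title]
    return min(others, key=lambda other: distance(embeddings[title], embeddings[other]))


print(closest("In group bias", toy_embeddings))  # Echo chambers
```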

To use OpenAI’s models, you need to sign up for their waitlist. I recently got in and have been blown away by the possibilities. In the rest of this post, I walk through the code I used to run OpenAI’s embeddings on my notes to find non-obvious connections between them.

For this exercise, I stuck to finding connections between the book notes in my vault, which also helped keep the OpenAI costs down (you get $18 of free credits to get started, and I intend to stretch them as long as I humanly can 😁).

The setup

This is a simple 4-step process:

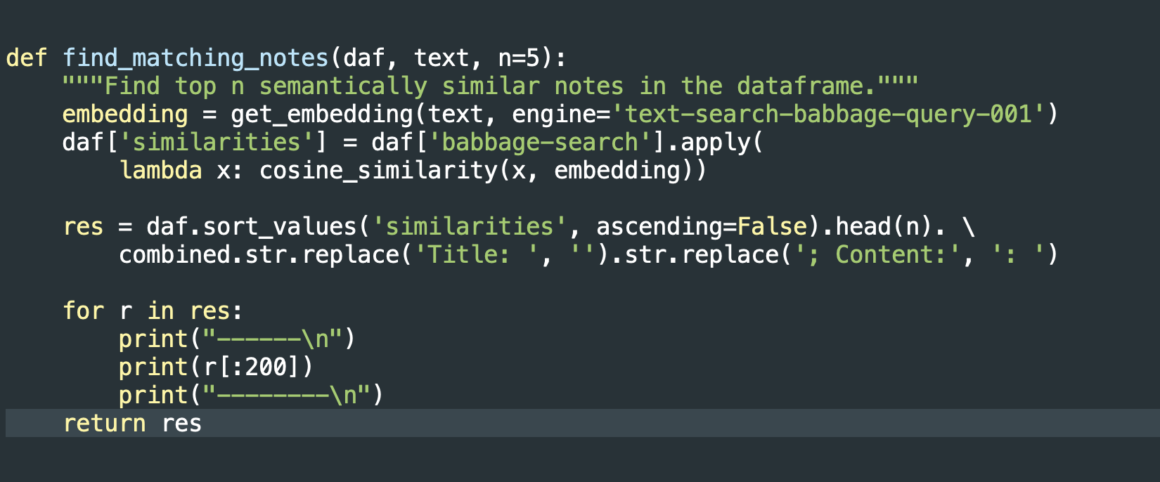

- Load all the notes into a Pandas DataFrame, which makes the computations easy to run (and makes it easy to load and dump data in .CSV format)

- Run embeddings on my notes to generate their vector representations, then dump them into another .CSV file

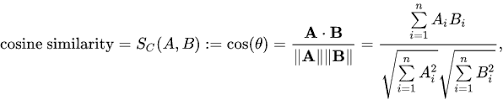

- Find the top 5 semantically similar notes for each note using cosine similarity, which measures the angle between two vectors (a score closer to 1 means the notes are more similar)
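The steps above can be sketched roughly as follows. This is a minimal sketch under stated assumptions, not the exact code from the repo: the post keeps everything in a Pandas DataFrame, while this version sticks to the standard library to stay dependency-free, and the function names, the folder layout and the OpenAI model name are all assumptions. The embedding function is passed in as a parameter, so you can plug in the real API call or a fake embedder for testing.

```python
import csv
import math
from pathlib import Path
from typing import Callable, Dict, List, Tuple

Vector = List[float]


def load_notes(folder: Path) -> Dict[str, str]:
    """Step 1: read every Markdown note under `folder` into {title: text}.
    Notes with the same file name in different subfolders would overwrite each other."""
    return {p.stem: p.read_text(encoding="utf-8") for p in sorted(folder.rglob("*.md"))}


def embed_notes(notes: Dict[str, str], embed: Callable[[List[str]], List[Vector]]) -> Dict[str, Vector]:
    """Step 2: turn each note into a vector with whichever embedding function is passed in."""
    titles = list(notes)
    vectors = embed([notes[t] for t in titles])
    return dict(zip(titles, vectors))


def dump_embeddings(embeddings: Dict[str, Vector], path: Path) -> None:
    """Step 2 (cont.): write one CSV row per note: the title, then each vector component."""
    with path.open("w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        for title, vector in embeddings.items():
            writer.writerow([title, *vector])


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors: 1 = same direction, 0 = orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


def top_k_similar(title: str, embeddings: Dict[str, Vector], k: int = 5) -> List[Tuple[str, float]]:
    """Step 3: the k notes most similar to `title`, highest score first."""
    query = embeddings[title]
    scores = [(other, cosine_similarity(query, vec)) for other, vec in embeddings.items() if other != title]
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return scores[:k]


def openai_embed(texts: List[str], model: str = "text-embedding-3-small") -> List[Vector]:
    """Hypothetical wrapper around OpenAI's embeddings endpoint (openai>=1.0 Python client).
    The model name is an assumption; very long notes may need truncating first."""
    from openai import OpenAI  # imported lazily so the rest of the sketch runs without it

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.embeddings.create(model=model, input=texts)
    return [item.embedding for item in response.data]
```

With an API key set, something like `top_k_similar("Tunnel vision", embed_notes(load_notes(Path("Books")), openai_embed))` would return the five closest notes; the `Books` folder is a hypothetical location for the book notes, and a large vault may need to be sent to the API in batches. Keeping `embed` as a parameter means the loading, dumping and ranking logic can be checked with a fake embedder without spending any credits.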

The results

The long and short of it is that I was able to connect ideas I didn’t originally think were related. My notes on In-group bias turned out to be connected to Echo chambers, notes on Tunnel vision to First Principles, Implementation Intention to Commitment and Consistency bias, and so on. Some of these seem super obvious now, but given that I had over 700 notes across 55 books, I wasn’t able to connect and synthesise them when I was originally working on them.

My sense is that a semantically capable AI can act as a wingman of sorts while you make notes. I am way too occupied with my day-to-day responsibilities to work on an Obsidian plugin, but if anyone’s interested, they are welcome to use my rough code as a foundation. Here’s the GitHub repo. Happy Hacking!

I run a startup called Harmonize. We are hiring, and if you’re looking for an exciting startup journey, please write to jobs@harmonizehq.com. Apart from this blog, I tweet about startup life and practical wisdom in books.