Algorithms to live by is the first book that I read again, right after finishing it. It is filled with practical wisdom and connects real life problems with computing and mathematical theory. In this post and the next, I will try to summarise my key takeaways from the book.

Knowing when to stop

We encounter scenarios in life when we don’t know when is the right time to stop looking for solutions. Think situations like driving through a parking lane while looking for a spot. If you stop and park at the first empty stop, you may have to walk a long way but if you keep looking for a stop closer to the destination, you might miss out on a spot altogether. Mathematically speaking, these situations are called optimal stopping problems. The optimal solution of this problem dictates that you can stop after having looked at 37% of your available options and then go with when you find an option that beats all the others you’ve seen before.

For the first 37% of the time or options, you’re only looking and gathering information (creating a baseline). In some cases the available options could be in the form of time you’ve set to look for a parking. So if you plan on spending 10 mins looking for a spot, spend the first 4 minutes simply exploring the lot and then pick the first one that beats all the previous spots (beat here means matches your criteria best).

Balancing searching and sorting

Sorting is arranging items in a certain predefined order so it becomes easier to find a specific item. Like with anything, sorting takes effort and time and this time is well spent if we eventually use the sorted dataset to search for an item. In that sense, sorting and searching are tradeoffs on time. Which algorithm to employ to organise your files, wardrobe or pictures, depends on your specific retrieval needs and it makes sense to spend time thinking about organisation as it will save you time in the long run.

Similarly, in nature, a pecking order can be thought of as a sorting algorith. The objective of this ordering is to make it effortless (read: bloodless) to determine who is dominant in any given situation. Even basic societal rules like respecting your elders, nature’s hierarchies in the wild, basic maritime laws like gross tonnage, etc, are ways of managing the same tradeoff of sort v search.

Caching and memory

Just as sorting allows for faster search, our memory is dependent on how information is organised so it can aid faster retrieval. We have infinite capacity for storing memories but can only spend a finite time searching for memories. Forgetting something, is thus, similar to a cache, an organisation problem 🤯

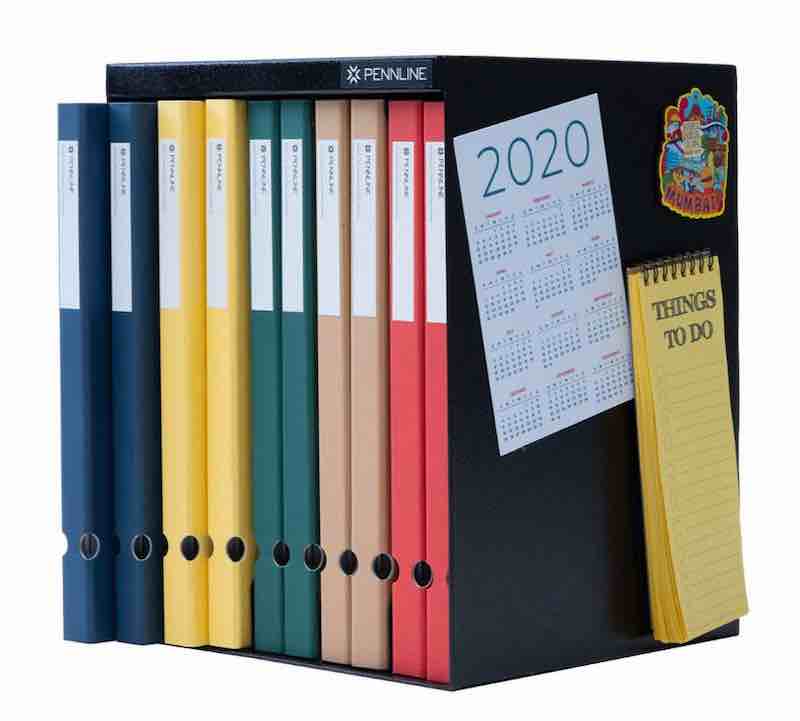

File used recently goes to the leftmost slot

File used recently goes to the leftmost slot

Something recently used is more likely to be used again and thus must be kept closest to us. The weird looking filing trays we see in some offices, may actually be a smart way to prioritise stuff. The file you used most recently goes back to the leftmost tray and the other ones slide right. That’s it. That’s the algorithm right there.

Since you are likely to need something you used most recently, always go for the left most tray to look for it. This is the Noguchi filing system and it is a real world physical adaptation of the least recently used cache.

Scheduling in real life

There are many different metrics to optimise when it comes to what we should work on. Sometimes the goal is to minimise overall lateness (unit - time, like in case of managing your washer and dryer), sometimes it is to minimise the number of late tasks (unit - number, consuming rotting produce in the fridge in some order) and sometimes it is to reduce the overall waiting time for someone blocked on us (clients who have given us several tasks). Each metric demands its own strategy. The goal must be to balance the different kinds of metrics. For example responsiveness and throughput. Your customer support team may need to be responsive so that your engineers can have throughput and so on.

Sometimes it may just be prudent to get the easiest thing done and out of the way and sometimes it may make sense to spend time to pick the most consequential item and do it first (to reduce the wait of the task with the highest importance/time ). This sounds like a good one size fit-most approach for most scheduling problems.

A common problem emanating from this is thrashing. There is always a fixed bookkeeping cost, no matter what kinds of tasks you have on your plate. When a juggler is given one more ball than he can manage, he doesn’t just drop that ball but all other balls. When tasks steal resources from each other or remove the meta work done by another task to do its own meta work, it is called Thrashing.

Another important concept that comes in handy is interrupt coalescing. This is when you wait for similar tasks to accumulate and do them all at once to save time on bookkeeping each individual task of the same kind. It also saves context switching, which is the time and energy that gets spent on moving from one kind of task to another. Imagine getting interrupted to answer a question while you’re in the middle of writing an email to placate a customer. It takes time to think of the answer, actually answer and then get back to writing the email. The idea therefore must be to minimise interruptions, so you can get all the tasks of one kind done in one go and then move on to the next kind.

I run a startup called Harmonize. We are hiring and if you’re looking for an exciting startup journey, please write to jobs@harmonizehq.com. Apart from this blog, I tweet about startup life and practical wisdom in books.